Doing SEO does not just mean creating optimized content. That’s just one (essential) part of getting a page to rank #1 in Google search results. If you feel like your efforts are not paying off after producing plenty of content, it’s time to focus on the technical SEO issues that may be holding your pages back.

Technical SEO is just as important for building strong SEO for your site. Analyzing your website, focusing on its architecture, and making sure that Google can crawl it properly all help your site rise in the search rankings.

I have seen several websites with technical SEO problems reach Google’s first page after their issues were solved. So today I am going to present the most common technical SEO problems. Once they are solved, your website should perform better and see an improvement in organic traffic.

Find and Fix Technical SEO Problems

Before diving into the technical issues and fixes, let’s look at why you should prioritize technical SEO in the first place.

The reason is that technical SEO is what tells search engines that your website is of high value. Google prioritizes web pages that load fast and are responsive, easy to navigate, and secure. So, when Google finds these technical qualities on your site, it is more likely to rank you higher in the SERPs.

The same qualities also satisfy your visitors and improve user engagement on your website. All of this is only possible with a strong technical foundation.

Below, I discuss the common technical problems that are often ignored or overlooked and that ultimately damage your website. Let’s discover them and fix them.

Page Speed

When it comes to ranking on top and ensuring a good user experience, site speed matters the most. If your website takes more than two seconds to load, it is delivering a bad experience, and you should expect it to hurt your rankings.

In that case, even your best content won’t earn a higher ranking, and the chances of new pages on your site getting indexed will drop.

You would be working without reaching your objective, because a website that does not appear in Google does not generate traffic, and without traffic, your mission fails.

Many factors affect how fast a website loads. To find the issues affecting your site speed, first run a speed test. There are several tools that can help you with this, such as:

These tools help you determine which aspects are slowing down loading. Once you enter your page’s URL, the tool analyzes it and reports scores for your site’s Core Web Vitals.

Here are some common things that affect a site’s speed:

- Large image sizes

- Large HTML page size

- Redirect chains and loops

- Uncompressed pages, JavaScript, and CSS files

- Uncached JavaScript and CSS files

- Too many, or too large, JavaScript and CSS files

- Unminified JavaScript and CSS files

- Slow average document interactive time

How to Solve the Problem?

The tools above will also suggest a series of fixes for improving your page speed, such as:

- Optimize images

- Reduce the number of internal redirects

- Enable file compression so that files transfer faster and the browser can display them sooner in response to the user’s request

- Reduce server response time

- Minify JavaScript and CSS
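To get a feel for why compression matters, here is a minimal Python sketch that gzips a text asset in memory and reports the share of bytes saved. The sample stylesheet and the function name `compression_savings` are hypothetical; on a real site, compression is switched on in the web server or by a caching plugin rather than in code like this.

```python
import gzip


def compression_savings(text: str) -> float:
    """Return the fraction of bytes saved by gzip-compressing `text` (UTF-8)."""
    raw = text.encode("utf-8")
    compressed = gzip.compress(raw)
    return 1 - len(compressed) / len(raw)


# Hypothetical stylesheet: CSS and HTML repeat many selectors and property
# names, and that repetition is exactly what gzip exploits.
sample_css = ".button { color: #333; padding: 4px 8px; }\n" * 200
print(f"gzip saves {compression_savings(sample_css):.0%} of the bytes")
```

Real stylesheets are less repetitive than this sample, so expect smaller (but still significant) savings.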

If you use WordPress, these plugins can help you improve page speed:

- Imagify: This plugin automatically compresses your newly uploaded images along with those already in your WordPress media folder. With its three optimization levels, you have more control over the quality and size of your site’s images.

- Autoptimize: A complete optimization plugin that minifies, compresses, and combines JS, CSS, and HTML, and can move scripts to the end of the HTML so the site loads faster.

- W3 Total Cache: One of the best-known options, because it lets you not only minify files but also manage your site’s cache. It improves the SEO and user experience of your site by increasing website performance and reducing load times.

Tools for Minifying CSS and JS Code to Improve Page Speed:

After minifying the code, check your website’s loading speed again.

Core Web Vitals

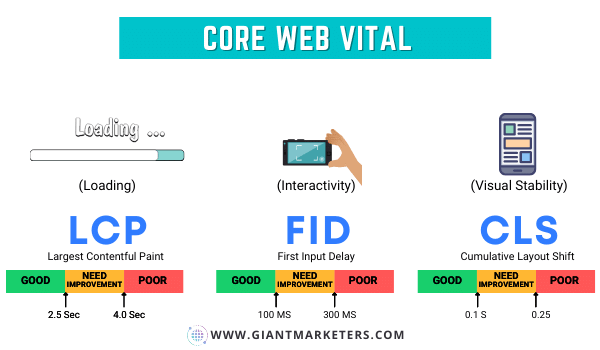

Core Web Vitals is an initiative by Google to ensure a great user experience. According to Google, the initial 2020 set focuses on three aspects of the user experience: loading, interactivity, and visual stability.

These aspects are measured by three metrics, LCP, FID, and CLS, which provide unified guidance on the quality signals of a site. Google evaluates these metrics to assess a site’s performance and user experience.

A bad score on any of them indicates that your site offers a poor user experience. So, let’s see what these three metrics mean.

LCP (Largest Contentful Paint)

Largest Contentful Paint measures how long it takes for the largest image or text block visible in the viewport to render. Google considers 2.5 to 4 seconds as needing improvement, and anything above 4 seconds as poor, to be addressed urgently. In other words, aim to keep LCP under 2.5 seconds.

FID (First Input Delay)

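FID measures the delay between a user’s first interaction with a page (such as a click or a tap) and the moment the browser can start responding to it. Between 100 and 300 milliseconds, Google suggests improvements, and anything above 300 milliseconds is considered poor and can hurt the page’s rankings. (In March 2024, Google replaced FID with Interaction to Next Paint, INP, as the Core Web Vitals responsiveness metric.)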

CLS (Cumulative Layout Shift)

This metric measures a page’s visual stability, that is, how much visible content unexpectedly shifts while the page loads (for example, a button that jumps down when an image above it finishes loading). It is probably the most abstract of the three metrics. Google considers a CLS score of 0.1 or less good, while a score above 0.25 points to a page whose design needs drastic improvement.
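The thresholds above are easy to encode. Here is a minimal Python sketch that rates a measured value the way Google’s bands do; the function name `rate` and the sample page values are hypothetical, and it covers only the three metrics discussed here.

```python
# (good_max, poor_above) per metric.
# Units: LCP in seconds, FID in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
}


def rate(metric: str, value: float) -> str:
    """Classify a metric value as good, needs improvement, or poor."""
    good_max, poor_above = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= poor_above:
        return "needs improvement"
    return "poor"


# Hypothetical measurements for one page.
page = {"LCP": 3.1, "FID": 80, "CLS": 0.27}
for metric, value in page.items():
    print(metric, value, rate(metric, value))
```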

How to Check and Fix It?

Checking and fixing Core Web Vitals is not that complicated. Use Google PageSpeed Insights to see all the metric scores. Along with the scores, the tool lists the factors affecting them and suggestions for fixing them.

Chrome DevTools also lets you see the metrics in real time. For automated Core Web Vitals audits, try Lighthouse to check your website’s issues.

By improving your website’s loading speed and solving the detected issues, you can fix most Core Web Vitals problems. Focus on your media files and on lazy loading, both of which you can easily optimize with plugins.

Check Redesign and Proper 301 Redirects

In many cases, I find that a website suffers a drop in organic traffic after a redesign. Sometimes traffic drops by around 80%, and at other times by 10% or 20%.

New website designs are a double-edged sword for SEO. During a redesign, you change many parts of your site, such as its code and its pages. If these changes are not done right, they can hurt the site’s SEO, cause a traffic “crash”, and take months to recover from.

The most common mistake site owners make while redesigning their site is forgetting to set up 301 redirects from the old URLs to the new ones.

Without them, search engines hit 404 (page not found) errors. In these cases, the website’s rankings fall, and with them a large number of visits. Organic traffic can also drop when a lot of text is removed from main pages or from topic or product categories because the new web design is more minimalist.

How to Find It?

You can easily check this in Google Analytics. If the drop in organic traffic coincides with the date the website was redesigned, you have most likely run into this problem.

How to Fix It?

In many of the cases I have analyzed, the old URLs had been deleted without any record, which makes it difficult to create the 301 redirects. In other cases, where a CMS such as WordPress was used, the old pages were often left active but not visible.

In that case, you can use 301 redirects to send each old URL to its new one. On an Apache server, you can add them to the .htaccess file, like this:

Redirect 301 /old-url https://yoursite.com/new-url
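If you have many old URLs, writing these lines by hand gets error-prone. Here is a minimal Python sketch that turns an old-path to new-path mapping into `Redirect 301` lines for .htaccess. The mapping, the domain, and the function name `htaccess_redirects` are hypothetical; review the output before pasting it into your .htaccess file.

```python
def htaccess_redirects(mapping: dict[str, str], new_domain: str) -> str:
    """Build Apache `Redirect 301` lines from {old_path: new_path}."""
    lines = []
    for old_path, new_path in mapping.items():
        if not old_path.startswith("/") or not new_path.startswith("/"):
            raise ValueError(f"paths must start with '/': {old_path!r} -> {new_path!r}")
        if old_path == new_path:
            continue  # URL did not change, so no redirect is needed
        lines.append(f"Redirect 301 {old_path} {new_domain.rstrip('/')}{new_path}")
    return "\n".join(lines)


# Hypothetical URL changes from a redesign.
print(htaccess_redirects(
    {"/old-url": "/new-url", "/blog/seo-tips": "/blog/technical-seo-tips"},
    "https://yoursite.com",
))
```

One design note: according to the Apache mod_alias documentation, `Redirect` also matches everything beneath the given path (a rule for `/old-url` sends `/old-url/page` to `/new-url/page`), so use `RedirectMatch` with an anchored pattern when you need exact matches only.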

Use Redirection Plugin:

If you don’t want to do this process manually, you can create 301 redirects very easily with the WordPress Redirection plugin.

Here are some essential steps that will help you redesign your site without hurting SEO:

- Back up the URL structure of your old site.

- Do the redesign on a temporary URL.

- Test the new site’s design and redirects, and once everything looks correct, switch to the new site.

Then, move to the next steps to optimize your site.

Text to HTML Ratio

If the drop in traffic may be due to text removed from the main pages or categories, and the text-to-HTML ratio is now very unbalanced, you should work out how to put the relevant text back on those pages.

Let’s learn a little more about this:

- Text ratio: the percentage of plain text on a specific URL.

- HTML ratio: the percentage taken up by code, such as HTML tags, CSS, and image markup.

What is the Ideal Percentage Between Text and Code On A Web Page?

It is commonly recommended that the text ratio fall between 25% and 70%. That is, at least about a quarter of the page’s content should be plain text, and the rest can be add-ons such as images, multimedia, scripts, and CSS. You can check the ratio with an online code-to-text ratio checker.
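If you want to see roughly how such a checker works, here is a minimal Python sketch that extracts the visible text of a page with the standard-library `html.parser` and divides its length by the length of the full HTML. It is an assumption that checkers count this way; some count bytes or include different elements, so expect their numbers to differ somewhat.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self.chunks: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Visible text length divided by total HTML length (0.0 for empty input)."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # collapse whitespace
    return len(text) / len(html) if html else 0.0


page = "<html><head><style>p{color:red}</style></head><body><p>Hello world</p></body></html>"
print(f"text ratio: {text_to_html_ratio(page):.0%}")
```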

Optimizing a Low Text to HTML Ratio

A low text-to-HTML ratio usually signals redundant code, which makes your site load slower and, in turn, hurts your ranking. The reasoning is also that Google may use the ratio to judge a page’s actual relevance, so the higher your text-to-code ratio, the better for ranking. Here is how to improve it:

- Validate your HTML code; the W3C Markup Validation Service is a good place to start.

- Remove any HTML code that is not needed to display the page.

- Put CSS and JavaScript in separate files, and avoid inline CSS and JavaScript whenever possible.

- Remove unnecessary whitespace and code comments, and avoid excessive tabs.

- Resize images, and remove all the unnecessary ones.

- Keep the size of your page under 300 KB.

Remember, these steps won’t move you to the top position on their own, but they will help your site load faster and provide a better user experience.

Security Issue

Back in 2016, Google announced that Chrome would begin marking non-HTTPS sites as “not secure”, starting with pages that accept passwords or credit card details. Google has also confirmed that HTTPS is a ranking signal.

That said, the SSL certificate itself has little influence on SEO, perhaps 0.5% of the total, which is very little. HTTPS is a communication protocol that offers safer browsing by protecting the integrity and confidentiality of user data.

Google’s push quickly moved many websites around the world to this protocol. Now suppose a user arrives at your website from Google or anywhere else and sees the “not secure” warning.

They may leave immediately and go back to the SERP. As a result, your site will face a high bounce rate and deliver a bad user experience.

How to Get It?

The only formal requirement for installing an SSL certificate on your company’s website is that the server hosting the site allows SSL certificates to be installed.

An SSL certificate alone will not win you much better Google positions, but it does build trust with your visitors, because once it is installed, the connection between their browser and your site is encrypted.

Check Mobile-friendliness

In 2018, Google officially began rolling out mobile-first indexing, which means it primarily uses the mobile version of a page, rather than the desktop version, for indexing and ranking. As of September 2021, about 54.61% of worldwide web traffic came from mobile devices.

Still, almost 24% of existing web pages are not compatible with mobile devices, and such sites risk losing rankings. That is why having a mobile-friendly website is more essential than ever.

How to Check Mobile Friendliness?

To find out whether a website is compatible with mobile devices, use these tools:

How to Make Your Site Mobile-Friendly?

The tools above tell you which resources to optimize. Try to get the best possible score in each of them; if you don’t know how, contact a web developer. These steps are a good start:

- Choose a responsive WordPress theme and a reliable web host.

- Optimize your site’s loading time by implementing caching, using a CDN, compressing images, minifying code, and keeping everything updated.

- Redesign popups so that they are unobtrusive and easy to close.

- Enable AMP to make mobile browsing smoother.

- If the text is too small, there is a 95% chance the page will be judged incompatible with mobile devices. So, keep the text large enough for readers to read comfortably.
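The tools above do the real testing, but one quick thing you can check yourself is whether a page declares a responsive viewport, since responsive themes normally include `<meta name="viewport" content="width=device-width, initial-scale=1">`. The following Python sketch (the function names are hypothetical) only looks for that tag; passing this check does not make a page mobile-friendly on its own.

```python
from html.parser import HTMLParser
from typing import Optional


class _ViewportFinder(HTMLParser):
    """Remembers the content of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name == "viewport" and self.viewport is None:
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    finder = _ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    # Normalize spacing and case so "Width = device-width" also counts.
    return "width=device-width" in finder.viewport.replace(" ", "").lower()


print(has_responsive_viewport('<meta name="viewport" content="width=device-width, initial-scale=1">'))
```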

Indexing problems

This point is crucial in a technical SEO audit, whether the website is small or large. Wasting Googlebot’s time (your crawl budget) is not a good idea.

Whether a page gets indexed and ranks organically depends largely on Google’s ability to crawl it. In many of my audits, this is one of the main problems I observe, and it causes many problems with the site’s organic positioning.

Making the site easier for bots to crawl, and focusing them on the website’s essential pages, is one of my favorite SEO skills. I have seen some websites change overnight just by quickly fixing this one point.

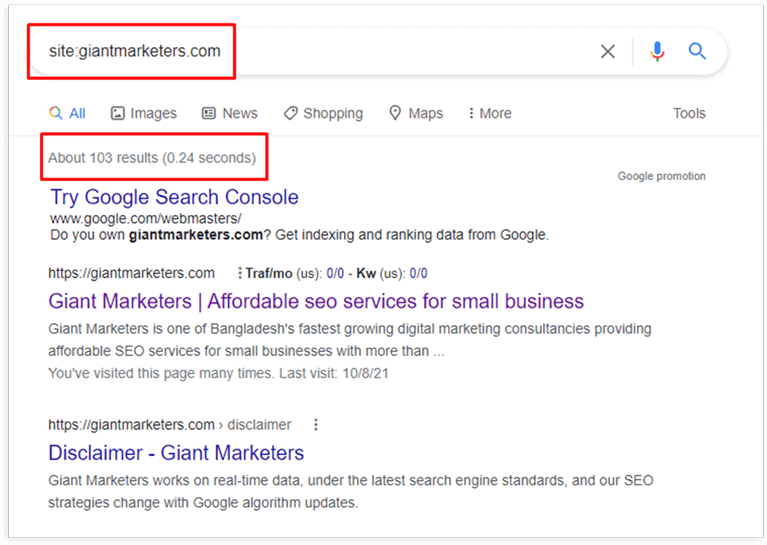

How to Detect Indexing Problems?

The easiest way is to type site:yoursitename.com into the Google search bar. Google then shows roughly how many pages of your site are indexed, as in the image above.

Another good place to detect crawl problems is the Sitemaps section of Google Search Console.

There, check whether there is a big difference between the number of URLs submitted to Google and the number actually indexed. The second thing to check is the indexing report (Google Index > Index Status in the old Search Console, Indexing > Pages in the current one). A significant drop in the total number of indexed pages means you have a big problem to solve.
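If you export both lists (the URLs in your sitemap and the URLs Search Console reports as indexed), comparing them is simple set arithmetic. Here is a minimal Python sketch; it assumes you have already loaded both exports as sets of absolute URLs, and the function name `index_gap` is hypothetical.

```python
def index_gap(submitted: set[str], indexed: set[str]) -> dict:
    """Compare sitemap URLs with the URLs reported as indexed."""
    indexed_from_sitemap = submitted & indexed
    return {
        "indexed_ratio": len(indexed_from_sitemap) / len(submitted) if submitted else 0.0,
        "not_indexed": sorted(submitted - indexed),     # submitted but missing from the index
        "not_in_sitemap": sorted(indexed - submitted),  # indexed but never submitted
    }


# Hypothetical exports.
submitted = {"https://example.com/", "https://example.com/a", "https://example.com/b", "https://example.com/c"}
indexed = {"https://example.com/", "https://example.com/a", "https://example.com/old-page"}
print(index_gap(submitted, indexed))
```

Pages in `not_in_sitemap` are worth a look too: an old page that is still indexed instead of redirected often shows up there.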

How to Fix It?

Now, check whether any pages of your site are missing from the index, and whether any pages you don’t want there have been indexed. Then do the following:

- Check subdomains to make sure they’re indexed.

- Confirm that the old version of your site hasn’t mistakenly been indexed instead of redirected.

- Figure out exactly what is causing the indexing problems.

This will lead you to the next two points to optimize: the robots.txt file and the XML sitemap.

Robots.txt

One of the biggest causes of lost organic traffic is forgetting to update the robots.txt file after redeveloping your site. The robots.txt file controls which pages web crawlers are allowed to crawl.

Unfortunately, many site owners don’t know how to configure robots.txt properly. To check for issues, type yourdomain.com/robots.txt into your browser’s address bar. If the result shows “User-agent: * Disallow: /”, there is a problem: that rule blocks all crawlers from the entire site.
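You can run the same check in code with Python’s standard `urllib.robotparser` module. Here is a minimal sketch; the function name `blocks_path` is hypothetical, and keep in mind that this parser follows the original robots.txt rules and does not understand Google’s `*` and `$` path wildcards, so complex files still need a manual review.

```python
from urllib.robotparser import RobotFileParser


def blocks_path(robots_txt: str, path: str = "/", user_agent: str = "Googlebot") -> bool:
    """Return True if `robots_txt` forbids `user_agent` from crawling `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, path)


# The dangerous leftover from a development site:
print(blocks_path("User-agent: *\nDisallow: /"))  # the whole site is blocked
```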

How to Fix:

Talk to your developer about this immediately; there may be a reason behind it. If your robots.txt file is complex, as it often is on e-commerce sites, review it line by line together with your developer.

Meta robots NOINDEX

A NOINDEX meta robots tag can be even more damaging. A NOINDEX directive is enough to remove every page that carries it from Google’s index. Usually, NOINDEX is set while a site is in development and should be removed once the site goes live.

How to Fix?

First, view your page’s source code for a manual spot check, and look for any of the following:

<meta name="robots" content="noindex, follow">

<meta name="robots" content="index, nofollow">

<meta name="robots" content="noindex, nofollow">

Your most important pages should contain either “index, follow” or no robots meta tag at all. Also verify that the less important pages you don’t want indexed are tagged with “noindex”. This becomes more crucial as a website grows, so that its pages continue to be classified correctly.
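For a quick spot check of many saved pages, here is a minimal Python sketch that reads the robots meta tags with the standard-library `html.parser` and reports whether a page is noindexed. The function names are hypothetical; it also treats `none` as noindex (it means “noindex, nofollow”) and reads `googlebot` meta tags, but it does not see the `X-Robots-Tag` HTTP header, which can also carry noindex.

```python
from html.parser import HTMLParser


class _RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()  # attribute values keep their case
        if tag == "meta" and name in ("robots", "googlebot"):
            content = (attributes.get("content") or "").lower()
            self.directives.update(d.strip() for d in content.split(",") if d.strip())


def robots_directives(html: str) -> set[str]:
    finder = _RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return finder.directives


def is_noindexed(html: str) -> bool:
    directives = robots_directives(html)
    return "noindex" in directives or "none" in directives


print(is_noindexed('<meta name="robots" content="noindex, follow">'))
```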

It’s best to use a tool to scan your entire site. Here are some of the best tools for this:

Check Your Sitemap

There are two types of sitemaps: HTML and XML.

An HTML sitemap is written for people: it helps visitors understand the site’s architecture and find pages easily.

In contrast, an XML sitemap guides search engines, helping them crawl a website accurately and understand its structure.

When it comes to technical SEO, it is crucial to ensure that all your indexable pages are included in your site’s XML sitemap. But an incorrect or invalid sitemap often causes indexing and crawling errors. Here are the most common ones:

Empty XML sitemap: This can have several causes. For example, some file names may be incorrect, or the file may be empty or badly labeled.

Either way, open your sitemap and check whether it is empty. If it isn’t, check the URLs and verify that you have used the correct tags and haven’t misplaced a file.

Compression error: This means that Google could not decompress the file, so go back to your sitemap and check the compression. The safest fix is to re-compress the file and resubmit it.

Invalid URL: You have probably included a special or unsupported character, such as a comma, in a URL by mistake, or made a spelling mistake. Check your URLs carefully to find the error; once you find it, fix it, update the sitemap, and resubmit it.

Other common issues with XML sitemaps include:

- Not creating the sitemap in the first place

- Not including the sitemap’s location in robots.txt

- Allowing multiple versions of the sitemap to exist

- Not updating Search Console with the freshest copy

- Not using sitemap indexes for large sites

How to Fix:

First, use the following search operators to check whether there’s an XML sitemap:

- site:domain.com inurl:sitemap

- site:domain.com filetype:xml

- site:domain.com ext:XML

If there’s no sitemap, create one and submit it to Google Search Console. If a sitemap has already been submitted, compare the number of URLs you submitted with the number indexed in Search Console. This gives you an idea of the quality of your sitemap and its URLs.

Monitor the indexing of the URLs in your XML sitemaps frequently. Also note that if you block a page in robots.txt, there is no point in including it in your XML sitemap.

Always keep your site’s high-quality pages in the sitemap. A single sitemap file is limited to 50,000 URLs, so if your site has more than that, split the URLs across several sitemaps referenced from a sitemap index, ideally generated dynamically.
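Here is a minimal Python sketch of that splitting, using the standard `xml.etree.ElementTree` module. It chunks a URL list into sitemap files of at most 50,000 URLs each and writes a sitemap index that lists them. The file names, the base URL, and the function names are hypothetical, and the sketch does not enforce the protocol’s separate 50 MB uncompressed size limit per file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemaps.org protocol
XML_DECLARATION = '<?xml version="1.0" encoding="UTF-8"?>\n'


def build_sitemap(urls: list[str]) -> str:
    """Return one <urlset> sitemap containing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url  # text is XML-escaped
    return XML_DECLARATION + ET.tostring(urlset, encoding="unicode")


def build_sitemaps(urls: list[str], base_url: str) -> dict[str, str]:
    """Split `urls` into sitemap files plus a sitemap index; returns {file name: XML}."""
    files: dict[str, str] = {}
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for start in range(0, len(urls), MAX_URLS_PER_SITEMAP):
        name = f"sitemap-{start // MAX_URLS_PER_SITEMAP + 1}.xml"
        files[name] = build_sitemap(urls[start:start + MAX_URLS_PER_SITEMAP])
        entry = ET.SubElement(index, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url.rstrip('/')}/{name}"
    files["sitemap-index.xml"] = XML_DECLARATION + ET.tostring(index, encoding="unicode")
    return files


# Hypothetical large site with 50,001 URLs: produces two sitemaps and one index.
site_urls = [f"https://example.com/page-{i}" for i in range(50_001)]
print(sorted(build_sitemaps(site_urls, "https://example.com")))
```

You would then submit only the index file in Search Console and reference it in robots.txt.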

Broken links

A broken link is a link that leads to a page that no longer works, usually returning a 404 error. Typically, the target page, or even the entire website, has been removed or moved to another URL or domain without a redirect.

Broken links are bad not only for SEO but also for your site’s user experience. They usually appear after a change in the permalink structure (more common with internal links).

Another cause is links to content (PDFs, Word documents, videos, etc.) that has been deleted or is no longer available (for example, on Dropbox). Fortunately, there are easy fixes for this problem.

How to Check It?

Checking every link in every article and page, plus your inbound links, by hand is a long and tedious task. The following tools and plugins can help.

Webmaster Tools

Google Search Console (formerly Webmaster Tools) will not give you a list of broken links as such, but it does list the 404 errors on your website. Go to the “Coverage” section (now “Pages” under “Indexing”) and analyze the content and errors. Look for the 404 errors, and you will have the broken URLs that Google has found on your website.

Broken Link Checker Plugin

This solution is more convenient and provides more information. It monitors all the internal and external links on your site to find broken ones. With it, you can:

- Detect links that don’t work

- Make broken links display differently in posts

- Edit links manually, directly from the plugin’s page

- Search and filter links by URL, anchor text, and so on

Screaming Frog SEO Spider Tool

If you have read about or taken courses on niche SEO, you have probably heard of this software, or even been taught to use it for keyword and on-page analysis. It also works for checking broken links.

Once it is installed, type your URL into the main bar, click “Start”, and wait for the crawl to finish. Then, in the top bar, open the “Response Codes” tab and choose “Client Error (4xx)” from the drop-down. Review the broken links it reports; since it crawls your ENTIRE site, this covers images, links, resources, 4xx errors, redirects, etc.
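To see what such a crawler does for a single page, here is a minimal Python sketch using only the standard library. It extracts `<a href>` and `<img src>` URLs, requests each one with an HTTP `HEAD` request, and reports those that fail or return 4xx/5xx. The function names and the user-agent string are hypothetical; `status_of` can be swapped out (for example, in tests) so no network access is needed.

```python
from html.parser import HTMLParser
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import Request, urlopen


class _LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href> and <img src> attributes."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            target = attributes.get("href")
        elif tag == "img":
            target = attributes.get("src")
        else:
            return
        # Skip empty values, in-page anchors, and non-HTTP schemes.
        if target and not target.startswith(("#", "mailto:", "tel:", "javascript:")):
            self.links.append(urljoin(self.base_url, target))


def fetch_status(url: str, timeout: float = 10.0):
    """Return the HTTP status for `url`, or None if the server was unreachable."""
    request = Request(url, method="HEAD", headers={"User-Agent": "broken-link-sketch"})
    try:
        with urlopen(request, timeout=timeout) as response:  # follows redirects
            return response.status
    except HTTPError as error:  # 4xx/5xx responses arrive as exceptions
        return error.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def find_broken_links(html: str, base_url: str, status_of=fetch_status):
    extractor = _LinkExtractor(base_url)
    extractor.feed(html)
    extractor.close()
    broken = []
    for link in dict.fromkeys(extractor.links):  # de-duplicate, keep order
        status = status_of(link)
        if status is None or status >= 400:
            broken.append((link, status))
    return broken
```

Some servers reject `HEAD` requests with a 405 status; if you see many of those, retry the affected URLs with a normal `GET` before treating them as broken.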

How to Fix It?

After detecting the broken links:

- Make a list of them.

- Remove the non-essential broken links from the pages where they were detected.

- Replace the others with a working source that covers the same topic you wish to refer to.

Content Structure and Cannibalization

Creating content to drive traffic sometimes brings an added problem: poorly structured content causes ranking problems. A bad site structure or categorization affects both small and large websites.

I have verified this in many of the technical SEO audits I have carried out. You must spend time not only creating content but also organizing it; think of it as a secret weapon that no one can see. The larger the website, the more important it is to categorize the content well.

For instance, if you publish new blog content weekly, take care to avoid cannibalization between articles. It also often happens that blog articles take rankings away from product pages in online stores, which hurts e-commerce sales.

You have to be careful with your choice of keywords when you run a blog and an online store on the same site. Keyword cannibalization occurs when two or more URLs of a website unintentionally rank for the same keyword.

One small detail, though:

It is not always bad to have 2 URLs positioned on page 1 for the same keyword. Keep in mind that Google shows the pages in the SERPs according to the user’s intention. Therefore, it may be the case that 2 pages of the same website are displayed for a search term.

How to Detect It?

To detect keyword cannibalization, you can use a simple search command: combine the site: operator with your keyword in quotes.

site:domain.com "keyword"

Another example, for “generate organic traffic”:

site:abc.com "generate organic traffic"

Alternatively, you can use a keyword mapping tool to find duplicate entries. If more than one of your URLs appears in the results, review those pages, because one may be taking rankings away from the other.
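If your rank-tracking tool can export rows of keyword, URL, and position, finding the duplicates is a simple grouping job. Here is a minimal Python sketch; the export format, the `max_position` cut-off of 20, and the function name are assumptions. As noted above, two URLs ranking for one keyword is not always bad, so treat the output as a review list rather than a verdict.

```python
from collections import defaultdict


def find_cannibalization(rows, max_position=20):
    """rows: (keyword, url, position) tuples from a rank-tracker export.

    Returns {keyword: [urls]} for keywords where more than one of your URLs
    ranks within the top `max_position` results.
    """
    urls_by_keyword = defaultdict(set)
    for keyword, url, position in rows:
        if position <= max_position:
            urls_by_keyword[keyword.strip().lower()].add(url)
    return {kw: sorted(urls) for kw, urls in urls_by_keyword.items() if len(urls) > 1}


# Hypothetical export rows.
rows = [
    ("generate organic traffic", "https://abc.com/blog/organic-traffic", 4),
    ("Generate organic traffic", "https://abc.com/services/seo", 9),
    ("technical seo", "https://abc.com/blog/technical-seo", 3),
    ("technical seo", "https://abc.com/shop/seo-audit", 45),
]
print(find_cannibalization(rows))
```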

How to Fix It?

The process of solving keyword cannibalization depends on the root of the problem. So, let’s find out the possible solutions.

- Restructure your site by taking one of your most authoritative pages and turning it into a landing page.

- Create new landing pages.

- Combine pages that target the same keywords into one page.

- Find new keywords and create content-rich pages.

- Use 301 redirects from any merged or removed pages to the page you keep.

Thin Content Issues

Thin content is another of the most common problems, especially in online stores, although it is also common on blogs. A study by Backlinko found that the average word count of content on Google’s first page was around 1,400.

Google usually treats thin content as low-quality content. Thin content takes potential away from the rest of the domain: pages with poor content tend to drag down the pages that rank well. It is a technical problem that must be addressed and solved.

How to Find It?

If users land on a page and leave very quickly, it is a bad sign. The content on that page may be incomplete or missing, or the relevant information may not be there, so users go to another website to find it. You can detect this easily with the SEMrush On-Page SEO Checker.

How to Fix It?

Once you have found the pages that get hardly any impressions or have a high bounce rate, choose one of the following solutions:

- Add more content to those pages (text, images, video, etc.).

- Put a “noindex” tag on pages that have little real value for ranking. This way, the potential of the main pages increases.
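If you combine a crawl export (word counts) with Search Console (impressions) and analytics (bounce rate), you can shortlist candidates automatically. Here is a minimal Python sketch; the `PageStats` fields and all three thresholds are hypothetical and should be tuned to your own site and niche before you act on the results.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    word_count: int
    impressions: int
    bounce_rate: float  # 0.0 to 1.0


# Hypothetical thresholds: adjust them to your site.
MIN_WORDS = 300
MIN_IMPRESSIONS = 50
MAX_BOUNCE_RATE = 0.85


def thin_content_candidates(pages):
    """Return (url, reasons) for every page that trips at least one threshold."""
    flagged = []
    for page in pages:
        reasons = []
        if page.word_count < MIN_WORDS:
            reasons.append("low word count")
        if page.impressions < MIN_IMPRESSIONS:
            reasons.append("few impressions")
        if page.bounce_rate > MAX_BOUNCE_RATE:
            reasons.append("high bounce rate")
        if reasons:
            flagged.append((page.url, reasons))
    return flagged


pages = [PageStats("/guide", 1500, 1200, 0.40), PageStats("/tag/misc", 80, 10, 0.92)]
print(thin_content_candidates(pages))
```

Pages flagged for several reasons at once are usually the first to either expand or mark “noindex”.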

Check Internal Links

Lacking internal links that pass authority from one page to another can cause indexing problems, and it means missing out on an SEO technique that grows organic traffic.

Internal links are very important to search engines, and my advice is to add them on every URL or page of your website. Some small websites do not use internal links to pass equity from one page to another, losing a significant part of their organic potential.

Linking articles to each other, or linking from the blog to the online store, adds extra value in Google’s eyes and helps keyword rankings. It can also have a huge impact on how easily search spiders crawl your site.

How to Find It?

For small websites, this can easily be done manually by visiting each page and checking whether it links to the others. For larger websites, you will need more precise tools, such as Screaming Frog SEO Spider.
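Once a crawler has given you each page’s outgoing internal links, counting inbound links per page shows which pages are starved of authority, and pages with zero inbound links are orphans. Here is a minimal Python sketch; it assumes you have already turned the crawl export into a dictionary of page to linked pages, and that the dictionary also includes pages known only from your sitemap (a crawler starting at the homepage never reaches true orphans). The function names are hypothetical.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """link_graph maps each page URL to the internal URLs it links to."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):  # count each source page once per target
            if target != source:     # ignore self-links
                counts[target] += 1
    return counts


def orphan_pages(link_graph, homepage):
    """Pages (other than the homepage) that no other page links to."""
    counts = inbound_link_counts(link_graph)
    return sorted(page for page, n in counts.items() if n == 0 and page != homepage)


# Hypothetical site structure.
graph = {
    "/": ["/blog/", "/shop/"],
    "/blog/": ["/blog/post-1/"],
    "/blog/post-1/": ["/shop/"],
    "/blog/post-2/": ["/"],
    "/shop/": [],
}
print(orphan_pages(graph, "/"))
```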

How to Fix It?

Placing internal links from blog posts to the main pages you want to rank is a great SEO strategy. The same can be done for online stores. In addition, take advantage of internal links to place your keywords in the anchor text.

The key is to vary your anchor texts naturally. This passes even more strength to the landing page.

Structured Data or Schema Markup Problems

To tell you the truth, most of the websites I audit lack structured data markup. Webmasters rarely use it, even though it helps search engines understand much better what each page is about.

Within SEO, structured data can indirectly boost keyword rankings, because it helps search engines understand each page of a website. According to Google, schema markup can also make a page eligible for rich snippets in search results.

How to Find It?

Use Search Console to detect structured data errors or pages that are missing markup. In Search Console, navigate to:

- Search Appearance

- Structured Data

You can also test a page with Google’s Rich Results Test, which replaced the old Structured Data Testing Tool.

How to Fix It?

To fix this problem, use the same tool to find where the error in the code is, and then fix it. But first, read Google’s structured data guidelines.

If you use WordPress, a plugin such as All In One Schema can make this task easier.

With it, your pages can get rich snippets, brief summaries of the page, in Google, Yahoo, or Bing search results.

If you use a different CMS, you may need help from a programmer to implement it. Keep in mind that Google supports the following formats:

- JSON-LD (recommended)

- Microdata

- RDFa
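To show what the recommended JSON-LD format looks like, here is a minimal Python sketch that builds an `Article` snippet with the standard `json` module. The choice of properties is a small illustrative subset of schema.org’s `Article` type, and the headline, author, date, URL, and function name are hypothetical; always validate the result with Google’s testing tools.

```python
import json


def article_jsonld(headline: str, author_name: str, date_published: str, url: str) -> str:
    """Return a <script> tag carrying schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date, e.g. "2021-09-30"
        "mainEntityOfPage": url,
    }
    body = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the tag early.
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_jsonld("Technical SEO Problems", "Jane Doe", "2021-09-30", "https://example.com/technical-seo"))
```

The output goes in the page’s HTML (usually the `<head>`), which is why JSON-LD is easier to maintain than Microdata or RDFa: it does not have to be woven into the visible markup.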

Implementing structured data can give you a competitive edge, since many web pages lack it.

Breadcrumbs for smooth navigation

Breadcrumbs give users one-click access to higher-level pages without pressing the browser’s back button several times. If your website has multiple layers, consider implementing breadcrumb navigation to make it easier to navigate.

Breadcrumbs can even appear in search results in a special format. They improve the user experience, reducing the bounce rate and increasing the time spent on the website, and both factors can affect a page’s ranking in searches.

How to Activate It?

There are three ways to enable breadcrumbs:

- Custom code. Edit your website’s .php files and add code that outputs the breadcrumb trail for each page. This requires knowledge of the WordPress API and its architecture.

- Theme without breadcrumb support. If your theme is not prepared to include breadcrumbs, you can install a WordPress plugin that adds them. However, this requires editing some .php files and adding lines of code, following the plugin’s instructions.

- Theme with support for breadcrumb plugins. The fastest and easiest solution: just install a plugin your theme is compatible with, and configure its appearance and functionality to suit you.
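To help Google show breadcrumbs in that special format in search results, pages usually carry schema.org `BreadcrumbList` structured data. Many breadcrumb plugins output it for you; if you go the custom-code route, here is a minimal Python sketch of the JSON-LD a trail produces. The trail and the function name are hypothetical; the structure (numbered `ListItem` entries with `name` and `item`) follows schema.org.

```python
import json


def breadcrumb_jsonld(trail):
    """trail: list of (name, url) pairs from the homepage down to the current page."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


print(breadcrumb_jsonld([
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("Technical SEO", "https://example.com/blog/technical-seo/"),
]))
```

Keeping each `name` identical to the visible breadcrumb label keeps the markup consistent with what users see.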

Best Practices Related to Breadcrumb

Like any element, there’s a right and a wrong way to do it. Here are some best practices to help you create the most effective breadcrumb navigation for your users:

- Only use breadcrumbs if they make sense for your site structure.

- Don’t make them too large.

- Include the full navigational path.

- Keep the breadcrumb titles consistent with your page titles.

- Keep it clean and uncluttered.

Check Broken Backlinks

Backlinks are still one of the main SEO factors Google takes into account when granting authority and trust to a website. Google weighs each backlink differently, according to its own criteria.

But after a migration, some of your top pages often end up returning 404 errors. Any backlinks pointing to them then become effectively broken, leading visitors and search engines to 404 pages.

How to Find It?

To find out if you have broken backlinks or not, you can use the following tools:

How to Fix It?

Once you have identified the top dead pages, 301-redirect them to the best matching live pages. Also check whether the linking site mistyped your URL or broke the link’s code. Finally, set up a recurring site crawl to catch new broken backlinks.
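To decide which dead pages to redirect first, you can rank them by how many distinct domains still link to them. Here is a minimal Python sketch; it assumes you have a backlink export as (source URL, target URL) pairs and a set of your URLs that now return 404, and the function name is hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlparse


def prioritize_dead_pages(backlinks, dead_pages):
    """Return dead target URLs sorted by number of distinct referring domains.

    backlinks: iterable of (source_url, target_url) pairs from a backlink export.
    dead_pages: set of your URLs that now return 404.
    """
    domains = defaultdict(set)
    for source, target in backlinks:
        if target in dead_pages:
            domains[target].add(urlparse(source).netloc.lower())
    # Most referring domains first; ties broken alphabetically for stable output.
    return sorted(domains, key=lambda target: (-len(domains[target]), target))


# Hypothetical export.
backlinks = [
    ("https://a.com/x", "https://mysite.com/old-guide"),
    ("https://b.com/y", "https://mysite.com/old-guide"),
    ("https://a.com/z", "https://mysite.com/old-guide"),
    ("https://c.com/", "https://mysite.com/old-tool"),
    ("https://d.com/", "https://mysite.com/live-page"),
]
dead = {"https://mysite.com/old-guide", "https://mysite.com/old-tool"}
print(prioritize_dead_pages(backlinks, dead))
```

Counting domains rather than individual links keeps one site that links to you many times from dominating the list.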

Final Thoughts

These technical SEO issues play a significant role in your site’s performance and rankings. Once you find and fix them, you should start seeing results fairly quickly.

Technical SEO gives you a competitive advantage, so focus on these problems and start fixing them with the help of this guide. I hope you’re now ready to fix them and make the most of technical SEO.

So, which of these SEO issues are you currently facing? Feel free to comment below if you have any questions about this guide. We’re always here to help.